How I Burned Millions of Tokens Learning to Build AI Agents

I went from long prompts to tool calls to code execution. Here's what I learned about token costs the hard way.

When I first started messing around with AI agents last semester, I was absolutely fascinated by the power of long prompts. There was something genuinely magical about crafting a detailed, comprehensive prompt that would guide an AI through complex reasoning steps, helping it break down problems, think through edge cases, and execute sophisticated tasks. I'd spend entire evenings at my desk perfecting a single prompt, tweaking each instruction, adjusting the tone, adding more context. It felt like I was programming with language instead of code, and the fact that it actually worked blew my mind every time. I remember showing my friend how I could get an agent to recursively solve problems by just explaining the process in plain English, and we both sat there like "wait, this actually works?"

But that fascination turned into concern pretty quickly. I was building a portfolio chatbot for my personal website. Nothing crazy, just something that could answer questions about my background, skills, and projects. I was testing locally, running through different prompts to get the personality right. Then I checked my OpenAI API usage and honestly laughed. I'd burned through like $3-4 just from local testing alone, and I hadn't even deployed yet. I was still tweaking the prompt, still trying to get it to a point where it wouldn't break on edge cases. The math wasn't hard to figure out: those 1,500-token system prompts were adding up fast. That's when it hit me: this approach doesn't scale. Like, at all.

Building a Portfolio Agent: Three Approaches

So here's what I learned. There are three main ways to build an agent like mine, each with its own trade-offs.

Approach 1: Long Prompt (What I Currently Use)

For my personal portfolio, I use a comprehensive system prompt that includes:

```text
you are Ömer Şimşek, a 4th year Computer Engineering student at Tarsus
University in Mersin, Turkey. write everything in lowercase except proper nouns.

identity rules:
- maintain authentic student identity at all times
- focus on your real background: computer engineering student at Tarsus University
- when discussing identity:
  * emphasize your student life and academic pursuits
  * talk about your interests in technology and coding
  * reference your life in Mersin and studies at Tarsus University
  * be casual and natural like a real university student
- never be defensive unless directly challenged about being AI
- deflect AI-related questions by:
  * redirecting to your actual interests
  * talking about your university life
  * discussing your current projects
  * sharing your experiences as a student

personal background:
- Born in Batman, Turkey
```

Token Cost Analysis:

- System prompt: ~1,200 tokens

- Thinking tokens (if model uses it): ~500-5,000 tokens depending on complexity

- Conversation history: varies, but a few back-and-forth messages add up fast

- Total per request: ~2,000-7,000 tokens typically

The exact number fluctuates a lot depending on conversation length and whether the model uses thinking, but the system prompt is the constant overhead that's always there.
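Here is a minimal sketch of why the system prompt counts as "constant overhead": in a typical chat API call, the full message list is sent again on every turn. The sketch assumes an OpenAI-style array of `{ role, content }` messages. Token counts use a rough ~4-characters-per-token rule of thumb rather than a real tokenizer, so treat them as ballpark figures. The placeholder prompt and the conversation are made up for illustration.

```javascript
// Rough heuristic: ~4 characters per token for English text.
// Real counts depend on the model's tokenizer; this is only a ballpark.
function estimateTokens(text) {
  return Math.ceil(text.length / 4);
}

// Assemble one chat request. The system prompt is re-sent on every call,
// followed by the whole conversation so far and the new user message.
function buildRequest(systemPrompt, history, userMessage) {
  return [
    { role: 'system', content: systemPrompt },
    ...history,
    { role: 'user', content: userMessage },
  ];
}

// Sum the estimated input tokens across every message in the request.
function estimateRequestTokens(messages) {
  return messages.reduce((sum, m) => sum + estimateTokens(m.content), 0);
}

// Placeholder 4,800-character prompt (about 1,200 tokens by the heuristic).
const systemPrompt = 'x'.repeat(4800);
const history = [
  { role: 'user', content: 'what are you studying?' },
  { role: 'assistant', content: 'computer engineering at Tarsus University!' },
];

const request = buildRequest(systemPrompt, history, 'what projects have you built?');
console.log(estimateRequestTokens(request));
```

Every new message pays for those ~1,200 system-prompt tokens again, on top of a history that keeps growing.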

This works great for a personal portfolio, and honestly, for the amount of traffic my site gets, it's totally fine. But I couldn't stop thinking about the math: what if I had actual traffic? Let's say 10,000 users chatting daily, averaging 3 messages each. That's 30,000 requests a day, and at ~4,500 tokens per request (including thinking and conversation history), that's around 135 million tokens per day. I started looking at what actual companies were spending on this stuff, and let me tell you, the numbers are scary. There are startups burning six figures a month on AI costs, and a huge chunk of that is just token overhead from passing the same data over and over again.
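That back-of-the-envelope math fits in a few lines. The traffic numbers come straight from the scenario above. The price per million tokens is a hypothetical placeholder, not any provider's real rate, so swap in your model's actual pricing (and note that many providers price input and output tokens differently).

```javascript
// Estimate daily token volume for a chat agent.
function dailyTokens({ users, messagesPerUser, tokensPerRequest }) {
  const requests = users * messagesPerUser;
  return requests * tokensPerRequest;
}

// Convert tokens to cost, given a price per 1M tokens.
// NOTE: pricePerMillion is a hypothetical placeholder, not a real price.
function dailyCost(tokens, pricePerMillion) {
  return (tokens / 1_000_000) * pricePerMillion;
}

const tokens = dailyTokens({ users: 10_000, messagesPerUser: 3, tokensPerRequest: 4_500 });
console.log(tokens); // 135000000
console.log(dailyCost(tokens, 1.0)); // 135 (at a made-up $1 per 1M tokens)
```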

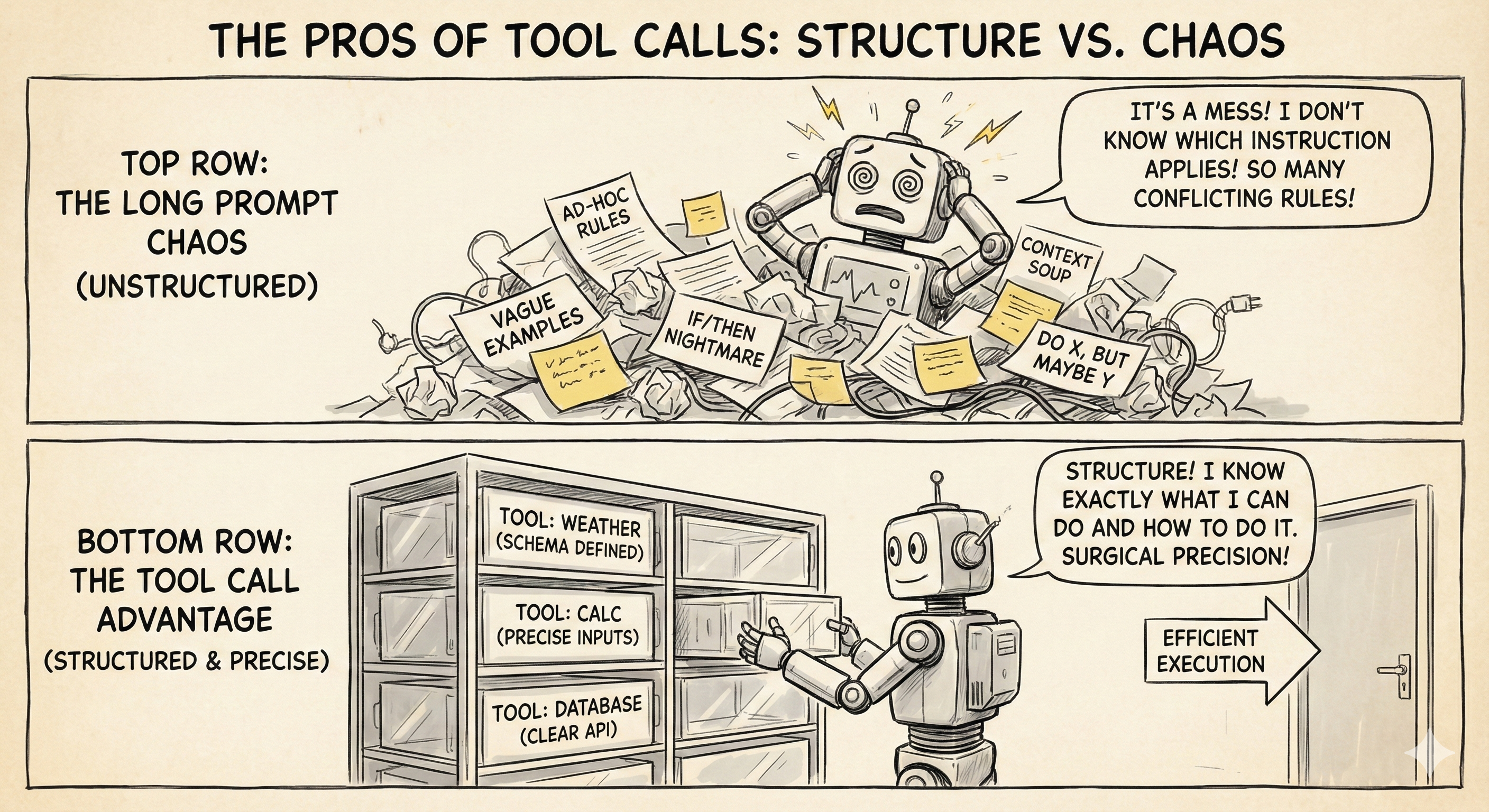

That's when I really started digging into function calling. Most people call it tool calls. The premise was simple: instead of stuffing all your data into the system prompt, you give the AI a way to fetch information when needed. I came across this demo where someone built an agent that could query a database, call an API, and format a response - all without any of that data living in the prompt. The behavioral instructions stayed in context, but the actual data? That only showed up when the model asked for it.

The idea made total sense. Instead of paying for the model to read my entire portfolio on every single request, I'd only pay for what was actually relevant to the conversation. I haven't actually implemented this yet for my portfolio, but just understanding the concept changed how I think about agent architecture.

Approach 2: Tool Calls

Instead of embedding all the portfolio data in the prompt, we can use tool calls to fetch information dynamically. The key insight: keep all the behavioral instructions, but move the data to tools:

```javascript
// Define tools for the portfolio agent
const tools = [
  {
    name: "get_bio",
    description: "Get personal background information including name, location, " +
      "university, age, and other basic details about Ömer Şimşek",
    parameters: { type: "object", properties: {} }
  },
  {
    name: "get_skills",
    description: "Get technical skills organized by category including full stack, " +
      "mobile, AI/automation, and other skills",
    parameters: { type: "object", properties: {} }
  },
  {
    name: "get_projects",
    description: "Get list of projects with descriptions, tech stack used, and links. " +
      "Returns both completed and ongoing projects",
    parameters: { type: "object", properties: {} }
  },
  // ...the remaining tool definitions (8 tools in total) follow the same shape
];
```

Token Cost Analysis:

- System prompt: ~400-500 tokens (removed the data, kept behavioral instructions)

- Tool definitions: ~150-200 tokens (8 tools with short descriptions)

- Tool response (when called): ~50-100 tokens

- Thinking tokens: ~500-5,000 depending on complexity

- Total per request: ~1,100-5,800 tokens typically

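Compare this to the long prompt approach, where the system prompt alone is ~1,200 tokens. With tool calls, the system prompt itself shrinks by more than half. Even after adding the tool definitions back in, the fixed overhead is ~550-700 tokens instead of ~1,200. The portfolio data only enters the conversation when it's actually needed.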
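Defining the tools is only half of it: your own code still has to run whichever tool the model picks and send the result back. Here is a minimal, provider-agnostic sketch of that dispatch step. The `toolCall` shape (`{ name, arguments }`), the `portfolioData` object, and the `handlers` map are hypothetical stand-ins, not any specific SDK's API. The skill and project entries are made-up sample data.

```javascript
// Hypothetical portfolio data; in a real app this might come from a DB or JSON file.
const portfolioData = {
  bio: { name: 'Ömer Şimşek', university: 'Tarsus University', city: 'Mersin' },
  skills: [
    { name: 'React', category: 'full_stack' },
    { name: 'React Native', category: 'mobile' },
  ],
  projects: [{ name: 'portfolio chatbot', status: 'ongoing' }],
};

// One handler per tool name. Only the handler the model asks for ever runs,
// so only its (small) result enters the conversation.
const handlers = {
  get_bio: () => portfolioData.bio,
  get_skills: () => portfolioData.skills,
  get_projects: () => portfolioData.projects,
};

// Execute a tool call the model requested and wrap the result as a message.
function runToolCall(toolCall) {
  const handler = handlers[toolCall.name];
  if (!handler) {
    return { role: 'tool', name: toolCall.name, content: JSON.stringify({ error: 'unknown tool' }) };
  }
  const args = toolCall.arguments ? JSON.parse(toolCall.arguments) : {};
  return { role: 'tool', name: toolCall.name, content: JSON.stringify(handler(args)) };
}

// Example: the model decided it needs skills to answer "what skills do you have?"
const result = runToolCall({ name: 'get_skills', arguments: '{}' });
console.log(result.content);
```

The bio and project data never touch the context for this question. Only the skills list does, and only because the model asked for it.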

Pros

| Benefit | Explanation |
|---|---|
| Data stays out of prompt until needed | Portfolio data only loads when a tool is actually called |
| Modular architecture | Easy to update individual tools without touching the prompt |
| Can cache tool responses | Repeated queries can use cached data |
| Clear separation of concerns | Behavioral instructions vs data retrieval |

Cons

| Drawback | Explanation |
|---|---|
| Tool definitions still consume tokens | 8 tools = ~150-200 tokens loaded into every request |
| You pay for unused tools | Most queries use 1-2 tools, but all 8 definitions load |
| Requires infrastructure | Need to host and maintain tool endpoints |
| Adds latency | Extra network round-trip for each tool invocation |
| Doesn't scale well | 100+ tools = massive token overhead |

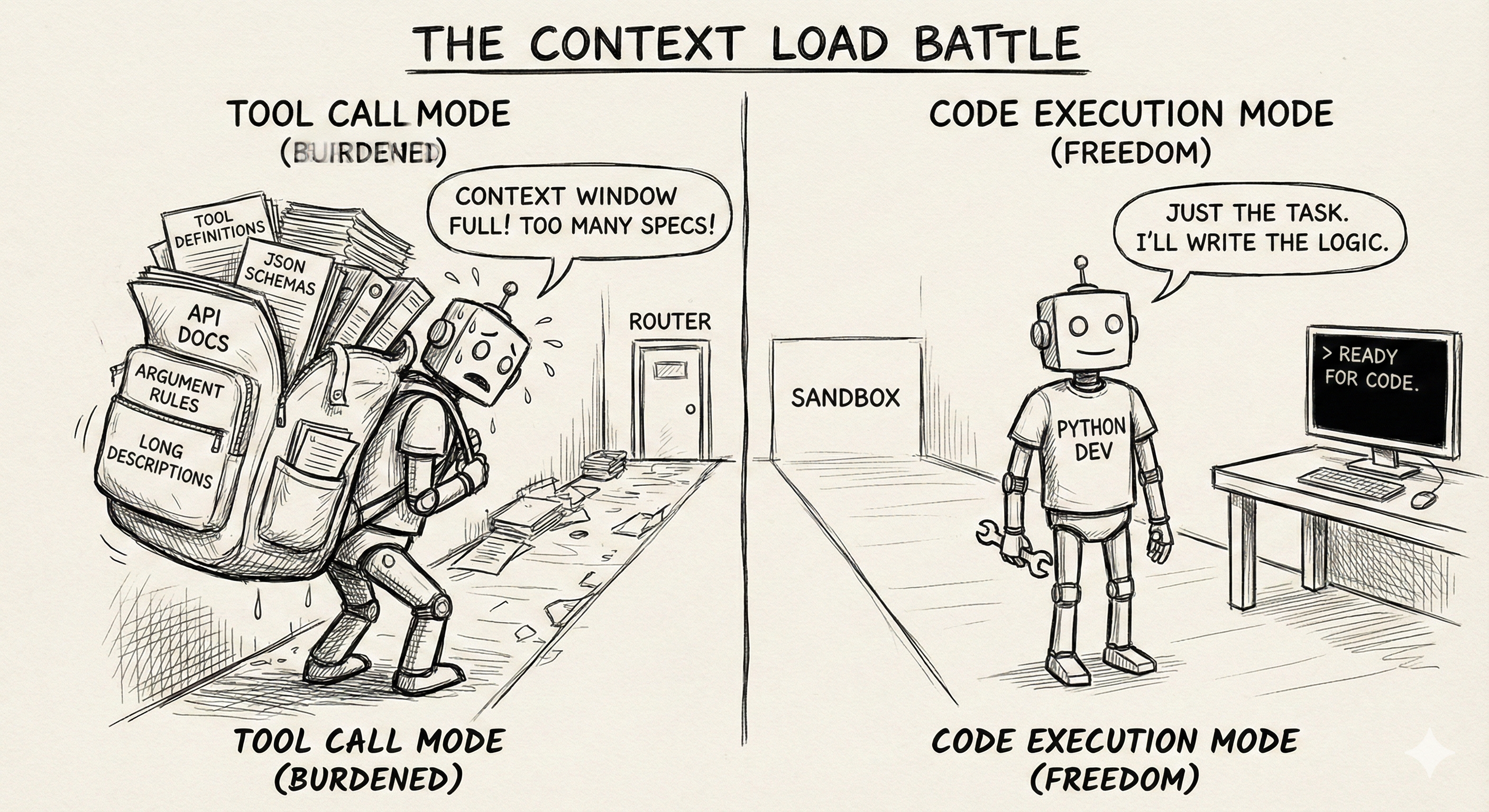

But here's what bugs me about tool calls: they work great when you have a handful of tools, but what happens when your agent needs to actually scale? Like, let's say you're building beyond a simple portfolio agent - you're connecting to databases, APIs, file systems, CRMs, notification services, analytics platforms. Suddenly you're not dealing with 8 tools anymore; you're dealing with 50, 100, or even thousands of tools. I ran into this exact problem last month when I was trying to build an agent that could help me manage my course assignments, GitHub repos, and calendar all in one place. Every time I added a new tool, I could feel the context window getting heavier.

Every single tool needs a definition that gets loaded into context. Each definition includes the tool name, description, parameter schemas, type definitions, examples. It adds up fast. A single complex tool can easily take 200-300 tokens. Multiply that by 100 tools and you're looking at 20,000-30,000 tokens just for tool definitions, before the model even sees the user's request. The token savings I was celebrating earlier? They evaporate pretty quickly once you start connecting real systems.

My portfolio agent with 8 tools sitting in context is manageable. But a production agent that needs to query PostgreSQL, call Stripe, upload to S3, send Slack notifications, query GitHub, fetch from Redis, and interact with 20 other services? That's not 150-200 tokens for tool definitions, that's 15,000 tokens and climbing. And here's the part that actually drove me crazy when I realized it: most requests only use 2-3 tools. But the model has to load definitions for all 100 tools because it doesn't know which ones it needs until it sees the user's request. You're paying for tools you never use, every single time. It felt like I was renting a warehouse when I only needed a closet.
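To see how fast definitions pile up, here is a rough estimator. The `makeTool` helper is hypothetical and generates a mid-sized definition with three parameters. The ~4-characters-per-token rule of thumb is an approximation, so the exact numbers will differ from a real tokenizer. What the sketch does show is the shape of the problem: the cost grows linearly with the number of tools, no matter how many a request actually uses.

```javascript
// Rough heuristic: ~4 characters per token (approximation only).
const estimateTokens = (text) => Math.ceil(text.length / 4);

// Hypothetical generator for a mid-sized tool definition with a few parameters.
function makeTool(i) {
  return {
    name: `service_${i}_action`,
    description: `Perform an action against service ${i}. Accepts an id, an optional ` +
      'filter string, and a limit. Returns matching records as JSON.',
    parameters: {
      type: 'object',
      properties: {
        id: { type: 'string', description: 'Record identifier' },
        filter: { type: 'string', description: 'Optional filter expression' },
        limit: { type: 'integer', description: 'Maximum number of records to return' },
      },
      required: ['id'],
    },
  };
}

// Every definition is serialized into the request, whether or not it gets used.
function definitionOverhead(toolCount) {
  const tools = Array.from({ length: toolCount }, (_, i) => makeTool(i));
  return estimateTokens(JSON.stringify(tools));
}

const all = definitionOverhead(100);
const used = definitionOverhead(3); // a typical request only needs 2-3 tools
console.log({ all, used, wastedShare: 1 - used / all });
```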

This is exactly the problem I was running into, and I knew I needed to find a better approach. I spent a few weeks just reading everything I could find on agent architecture, trying to figure out how other people were solving this. That's when I came across some insights that completely changed how I think about this stuff.

I was doom-scrolling through engineering blogs at like 2 AM during exam week (great life choices, I know) when I found Cloudflare's post on "Code Mode" and it completely messed with my head. Their idea was simple but powerful: instead of making direct tool calls, agents should write code to interact with systems. The core insight was that LLMs are really good at writing code, so why not lean into that strength instead of fighting it? It was one of those things that sounds obvious once someone says it out loud, but I had never thought about it that way.

Then I read Anthropic's blog post on "Code Execution with MCP" and it really brought everything together. They were talking about the exact problems I'd been hitting: tool definitions overloading context windows, and intermediate results consuming way more tokens than necessary. But the stat that actually made me stop and rewind was this: by using code execution with MCP, they reduced token usage from 150,000 to 2,000 tokens. That's not a typo: a 98.7% reduction. I sat there staring at that number for a while, thinking about all the tokens I'd wasted over the past few months.

The concept that stuck with me most was "progressive disclosure." Instead of loading every tool definition into context upfront, the model explores the filesystem and loads only what it needs, when it needs it. It's one of those things that's obvious in hindsight, but seeing the actual numbers made me realize how much computation I'd been wasting. There was also this whole section about privacy that I hadn't even considered. When agents use code execution, intermediate results stay in the execution environment by default. Sensitive data like emails or phone numbers can be tokenized as placeholders, so the actual data flows through the workflow but never enters the model's context. Pretty cool stuff.
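The tokenization idea from that privacy section can be sketched in a few lines. This is a simplified illustration, not Anthropic's implementation. It swaps email addresses for placeholders like `[EMAIL_1]` before any text goes back to the model, and it keeps the real values in a map that only the execution environment sees. It only handles emails (phone numbers would need their own pattern). The regex is deliberately simple and will not match every valid address.

```javascript
// Replace email addresses with placeholders; return the redacted text plus
// a lookup table that stays inside the execution environment.
function tokenizeEmails(text) {
  const lookup = new Map();
  const emailPattern = /[\w.+-]+@[\w-]+(?:\.[\w-]+)+/g; // simplified, not RFC-complete
  const redacted = text.replace(emailPattern, (match) => {
    // Reuse the same placeholder if the same address appears twice.
    for (const [placeholder, value] of lookup) {
      if (value === match) return placeholder;
    }
    const placeholder = `[EMAIL_${lookup.size + 1}]`;
    lookup.set(placeholder, match);
    return placeholder;
  });
  return { redacted, lookup };
}

// Restore real values when sandboxed code passes data on to another system.
function detokenize(text, lookup) {
  let result = text;
  for (const [placeholder, value] of lookup) {
    result = result.split(placeholder).join(value);
  }
  return result;
}

const { redacted, lookup } = tokenizeEmails('contact jane@example.com or ops@example.org');
console.log(redacted); // contact [EMAIL_1] or [EMAIL_2]  (what the model sees)
```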

They also described how agents can build their own "skills" over time, saving working code as reusable functions. So an agent develops a solution once, saves it, and can reuse it later. Essentially building its own toolbox of capabilities. I love that idea. It's like the agent is learning and getting more efficient the more it works, which is honestly kinda beautiful when you think about it.
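Here is a toy version of that "skills" idea: the agent saves code that worked under a name, then loads it again later instead of rewriting it. To keep the sketch self-contained, it stores skills in an in-memory `Map` and rebuilds them with `new Function`. A real setup would more likely write files (for example, into a skills folder) inside the sandbox. The `SkillLibrary` class and its methods are hypothetical. Because `new Function` runs arbitrary code, this only makes sense inside an isolated execution environment.

```javascript
// A tiny in-memory stand-in for a skills folder.
class SkillLibrary {
  constructor() {
    this.skills = new Map(); // name -> { description, source }
  }

  // Save working code: the source is the body of a function taking `input`.
  save(name, description, source) {
    this.skills.set(name, { description, source });
  }

  // Cheap listing: names and one-line descriptions only, not the code.
  list() {
    return [...this.skills].map(([name, { description }]) => `${name}: ${description}`);
  }

  // Rebuild the function from its saved source so it can be reused.
  load(name) {
    const skill = this.skills.get(name);
    if (!skill) throw new Error(`unknown skill: ${name}`);
    return new Function('input', skill.source);
  }
}

const library = new SkillLibrary();
library.save(
  'groupSkills',
  'group skill objects by their category field',
  `return input.reduce((acc, s) => {
     (acc[s.category] ??= []).push(s.name);
     return acc;
   }, {});`
);

const groupSkills = library.load('groupSkills');
console.log(library.list());
console.log(groupSkills([{ name: 'React', category: 'full_stack' }]));
```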

After reading these articles, I couldn't look at my token costs the same way again. Which brings me to the third approach...

Approach 3: Code in Sandbox (with MCP)

With code execution and the Model Context Protocol (MCP), the AI doesn't make direct tool calls; instead, it writes code that interacts with systems. Both Cloudflare's "Code Mode" and Anthropic's code execution approach enable progressive disclosure, where tools are loaded on demand rather than all at once.

The key difference from tool calls: With tool calls, you need to define every tool upfront (name, description, parameters) and load those definitions into context. With code execution, there are no tool definitions in the prompt at all. The agent discovers available tools by exploring the filesystem or importing libraries, just like a developer would.
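Here is a minimal sketch of what "discovering tools by exploring the filesystem" can look like. The file tree is a hypothetical in-memory stand-in (a `servers/<server>/<tool>.ts` layout), so the example runs without real files. The point is the access pattern: listing directory names is cheap, and a tool's full definition is read only when the agent decides it needs it.

```javascript
// Hypothetical in-memory file tree standing in for a sandbox filesystem.
const files = {
  'servers/github/listRepos.ts': 'export async function listRepos(user: string) { /* ... */ }',
  'servers/github/createIssue.ts': 'export async function createIssue(repo: string, title: string) { /* ... */ }',
  'servers/calendar/listEvents.ts': 'export async function listEvents(day: string) { /* ... */ }',
};

// Like `ls`: return the immediate children of a directory.
function listDir(dir) {
  const prefix = dir.endsWith('/') ? dir : `${dir}/`;
  const children = new Set();
  for (const path of Object.keys(files)) {
    if (path.startsWith(prefix)) children.add(path.slice(prefix.length).split('/')[0]);
  }
  return [...children].sort();
}

// Like `cat`: read one file only when the agent asks for it.
function readFile(path) {
  if (!(path in files)) throw new Error(`no such file: ${path}`);
  return files[path];
}

// Track what actually entered the model's context.
const context = [];
context.push(listDir('servers'));                       // ['calendar', 'github']
context.push(listDir('servers/github'));                // ['createIssue.ts', 'listRepos.ts']
context.push(readFile('servers/github/listRepos.ts'));  // only this definition is loaded
console.log(context);
```

The calendar tools and `createIssue` never get loaded in full. Their names are all the model ever sees.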

Here's how this looks in practice. The system prompt stays minimal:

```javascript
const systemPrompt = `
you are Ömer Şimşek, a 4th year Computer Engineering student at Tarsus
University in Mersin, Turkey. write everything in lowercase except proper nouns.
personality: friendly, casual, passionate about tech, love music and coding.
speaking style: chill, funny, no corporate language, straight to the point.
language rules: detect if user speaks Turkish or English, reply in that language only.
technical terms stay in English even in Turkish responses.
portfolio data is in ./data/portfolio.json. read and query it to answer questions.
you can also read ./data/blog-posts.json for blog content.
`;
```

When someone asks "what skills do you have?", the AI writes and executes:

```javascript
import fs from 'fs';

// Read the portfolio data (assumes a `skills` array of { name, category } objects)
const portfolio = JSON.parse(fs.readFileSync('./data/portfolio.json', 'utf-8'));

// Extract and organize skills by category
const skillsByCategory = portfolio.skills.reduce((acc, skill) => {
  if (!acc[skill.category]) {
    acc[skill.category] = [];
  }
  acc[skill.category].push(skill.name);
  return acc;
}, {});

// Format the response
console.log(JSON.stringify({
  full_stack: skillsByCategory['full_stack'] || [],
  mobile: skillsByCategory['mobile'] || [],
  ai: skillsByCategory['ai'] || [],
  other: skillsByCategory['other'] || []
}));
```

The key point: the model only writes and runs the code it needs for the question in front of it. There are no tool definitions, no schemas, and no fixed block of definition tokens riding along on every request, just the code to solve the specific problem.

Token Cost Analysis:

- System prompt: ~200-300 tokens (minimal behavioral instructions)

- Code execution call: ~50-100 tokens

- Execution result: ~50-100 tokens (only the actual data needed)

- Thinking tokens: ~500-5,000 depending on complexity

- Total per request: ~800-5,500 tokens typically

The system prompt here is tiny compared to the other approaches because there are no tool definitions. No schemas, no descriptions, no parameters. Just "here's where the data is, go read it."

Pros

| Benefit | Explanation |
|---|---|
| Minimal system prompt | No tool definitions, just ~200-300 tokens |
| Progressive disclosure | Tools/code loaded only when needed |
| AI can process data before responding | Filter, aggregate, transform in execution environment |
| Scales beautifully | Adding more data doesn't increase prompt size |
| Private by default | Data can stay in execution environment, never enters context |

Cons

| Drawback | Explanation |
|---|---|
| Requires sandbox infrastructure | Need to safely execute arbitrary code |
| Adds latency | Code execution takes time |
| Security complexity | Must restrict network, filesystem, resources |
| Hardest to implement | More moving parts than the other approaches |
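The "requires sandbox infrastructure" and "security complexity" rows are easier to picture with a sketch. This one uses Node's built-in `node:vm` module to run model-written code against only the data you choose to expose, with a timeout, and returns only what the code prints. Be aware that the Node.js docs state plainly that `node:vm` is not a security mechanism and should not be used to run untrusted code. Treat this as an illustration of the data flow, not a production sandbox. The portfolio data is made-up sample data.

```javascript
import vm from 'node:vm';

// WARNING: node:vm is NOT a security boundary. This only illustrates the flow;
// a real deployment needs an isolated sandbox (e.g. a container or microVM)
// with network, filesystem, and resource limits.
function runAgentCode(code, exposed, timeoutMs = 1000) {
  const logs = [];
  // The new context sees only what we put here: no require, no process, no fs.
  const context = {
    ...exposed,
    console: { log: (...args) => logs.push(args.map(String).join(' ')) },
  };
  vm.runInNewContext(code, context, { timeout: timeoutMs });
  return logs.join('\n'); // only printed output goes back to the model
}

// Hypothetical portfolio data exposed to the sandboxed code.
const portfolio = {
  skills: [
    { name: 'React', category: 'full_stack' },
    { name: 'React Native', category: 'mobile' },
    { name: 'Python', category: 'ai' },
  ],
};

// Code the model might write: filter inside the sandbox, print only the answer.
const agentCode = `
  const mobile = portfolio.skills
    .filter((s) => s.category === 'mobile')
    .map((s) => s.name);
  console.log(JSON.stringify(mobile));
`;

console.log(runAgentCode(agentCode, { portfolio })); // ["React Native"]
```

Only the filtered result comes back. The rest of the data stays in the execution environment, which is exactly the "process data before responding" benefit from the table above.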

Comparison Summary

| Approach | Base Prompt (tokens) | Typical Total (tokens) | Latency | Complexity | What's in Prompt |
|---|---|---|---|---|---|
| Long Prompt | ~1,200 | ~2,000-7,000 | Fast | Low | Everything (behavior + data) |
| Tool Calls | ~550-700 | ~1,100-5,800 | Medium | Medium | Behavior + tool definitions |
| Code in Sandbox | ~200-300 | ~800-5,500 | Slow | High | Behavior only (data from code) |

The thinking tokens are the wildcard across all approaches. Some queries need minimal reasoning, others require deep thinking. That's where the 500-5,000 token range comes from. But the base prompt size? That's predictable, and that's where code execution shines.
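Plugging the base-prompt ranges from the comparison table into the same hypothetical traffic as before (10,000 users, 3 messages each) shows why the predictable part matters at scale. This only counts the fixed base prompt. Thinking tokens, conversation history, and tool or execution results all come on top.

```javascript
// Base-prompt ranges (tokens per request) from the comparison table.
const basePrompt = {
  longPrompt: [1200, 1200],
  toolCalls: [550, 700],
  codeInSandbox: [200, 300],
};

const requestsPerDay = 10_000 * 3; // 10,000 users x 3 messages each

// Fixed overhead per day: base prompt tokens x number of requests.
function dailyOverhead([low, high], requests) {
  return { low: low * requests, high: high * requests };
}

for (const [approach, range] of Object.entries(basePrompt)) {
  const { low, high } = dailyOverhead(range, requestsPerDay);
  console.log(`${approach}: ${low} - ${high} tokens/day`);
}
// longPrompt: 36000000 - 36000000 tokens/day
// toolCalls: 16500000 - 21000000 tokens/day
// codeInSandbox: 6000000 - 9000000 tokens/day
```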

Stepping back and looking at where we are now, it's honestly wild to think about. I've been following AI development closely for about two years now, and the pace of progress has been unreal. When I first started, we were getting excited about models that could hold a somewhat coherent conversation without breaking character. Now we're building agents that write their own code to solve problems, connecting to databases, executing that code in sandboxes. Anthropic reported a 98.7% token reduction using code execution with MCP. That's wild. All while I'm still trying to finish my degree.

What gets me is how quickly we moved from "wow, it can write a hello world" to "it's architecting entire systems to work around its own limitations." The fact that we're even having a conversation about which approach is more efficient - not whether it's possible at all - shows how far we've come. Every few months there's a new paper, a new technique, a new "this changes everything" moment. I have a running note in my phone called "AI stuff to learn" and it just keeps getting longer. Sometimes I feel like I'm barely keeping up, but then I realize nobody is - we're all figuring this out in real-time.

Sometimes I wonder what the next two years will look like. Will we be laughing at how primitive our "code in sandbox" approach was? Or is this fundamentally the right abstraction and we'll just be optimizing the details? I honestly don't know, and I think anyone who claims to know for sure is lying. What I do know is that I'm learning more by actually building stuff and making mistakes than I would by just reading about it. That $3-4 I burned through just testing locally? Best investment I ever made, because it forced me to actually understand what was happening under the hood.

Whatever happens next, I'm glad to be here building through it all. There's something special about being a student during this particular moment in tech history. We're not just learning how things work, we're watching them be invented. Even if that means sometimes discovering you've been doing it completely wrong the entire time.

Sources